- How biology scaled, with a break for humanoids.

- Source (h/t Ilya Sutskever).

Misc

Miscellaneous is often where the gems are.

Bird Flu Risk Monitor

- A site that tracks the risk that bird flu becomes the next COVID.

- Currently little chance (8%).

Jensen Huang

- “Both Huang’s aunt and uncle were recent immigrants to Washington state; they accidentally enrolled him and his brother in the Oneida Baptist Institute, a religious reform academy in Kentucky for troubled youth, mistakenly believing it to be a prestigious boarding school. Jensen’s parents sold nearly all their possessions in order to afford the academy’s tuition […]”

- “[He] was frequently bullied and beaten. In Oneida, Huang cleaned toilets every day, learned to play table-tennis, joined the swimming team, and appeared in Sports Illustrated at age 14. He taught his illiterate roommate, a ‘17-year-old covered in tattoos and knife scars,’ how to read in exchange for being taught how to bench press. In 2002, Huang recalled that he remembered his life in Kentucky ‘more vividly than just about any other.’”

- Source.

Justin Welby

- Some people have the most fascinating backgrounds.

- The outgoing Archbishop of Canterbury lived an interesting life. His mother was Churchill’s personal secretary, married a businessman, had an affair with Churchill’s other secretary, and Justin was the result (he didn’t learn this until he was 60!). He went to Cambridge, had a mystical experience that converted him to Christianity, worked for an oil company in Nigeria for 11 years, then quit to get ordained as a priest. He says he can speak in tongues – “It’s just a routine part of spiritual discipline – you choose to speak and you speak a language that you don’t know, it just comes” – and has written a book called “Can Companies Sin?”. He lost his archbishop position last month for the most stereotypical possible reason – he failed to investigate sex abuse by a church leader who was a friend of his.

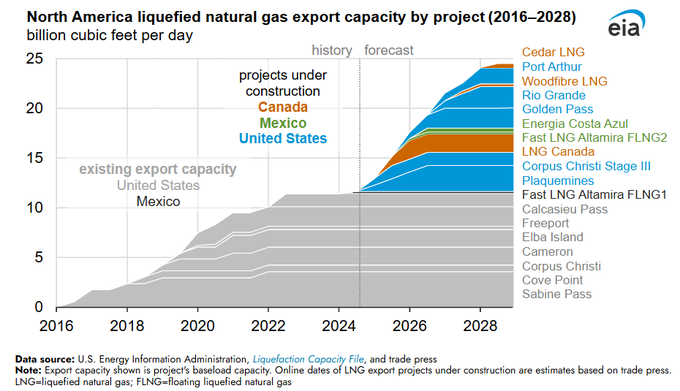

North American LNG

- North American LNG export capacity is about to see another surge over the next 3-5 years as new projects come online.

- “North America’s liquefied natural gas (LNG) export capacity is on track to more than double between 2024 and 2028, from 11.4 billion cubic feet per day (Bcf/d) in 2023 to 24.4 Bcf/d in 2028, if projects currently under construction begin operations as planned.”

- Source.
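As a quick sanity check on the "more than double" claim, the two capacity figures quoted above can be turned into a growth multiple and an implied annual growth rate. This is a minimal sketch: the function names are made up for illustration, and the annual rate assumes smooth compounding over the five years from 2023 to 2028, which real project start-ups will not follow.

```python
# Capacity figures quoted above, in billion cubic feet per day (Bcf/d).
CAPACITY_2023 = 11.4
CAPACITY_2028 = 24.4
YEARS = 2028 - 2023


def growth_multiple(start: float, end: float) -> float:
    """How many times larger the end value is than the start value."""
    return end / start


def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate, assuming smooth compounding (a simplification)."""
    return (end / start) ** (1 / years) - 1


multiple = growth_multiple(CAPACITY_2023, CAPACITY_2028)
annual = implied_annual_growth(CAPACITY_2023, CAPACITY_2028, YEARS)

print(f"Capacity multiple: {multiple:.2f}x")
print(f"Implied annual growth: {annual:.1%}")
```

On these numbers the multiple comes out a little above 2.1x, or roughly 16% a year if the growth were spread evenly.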

Prediction Markets as Info Finance

- Prediction markets continue to develop beyond headline bets like elections.

- This essay looks at further use cases.

- For stock investing, prediction markets could be an interesting addition: they start to dissect the single price that captures everything about a company into individual events and risks, from the macro to the micro (a CEO change, for example).

- This gives investors more granularity and a way to manage risks.
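One way to picture the idea of splitting a single price into event-level pieces is a probability-weighted sum over scenarios. The sketch below is purely illustrative: the `Scenario` class, the `implied_price` helper, the probabilities and the per-share values are all hypothetical, not taken from the essay or from any real market.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    probability: float      # hypothetical: as read off an event market
    value_per_share: float  # hypothetical: investor's estimate if the event occurs


def implied_price(scenarios: list[Scenario]) -> float:
    """Probability-weighted value across mutually exclusive scenarios."""
    total = sum(s.probability for s in scenarios)
    if abs(total - 1.0) > 1e-9:
        raise ValueError("scenario probabilities must sum to 1")
    return sum(s.probability * s.value_per_share for s in scenarios)


# Made-up example: a single event market on a CEO change.
scenarios = [
    Scenario("CEO stays", probability=0.8, value_per_share=100.0),
    Scenario("CEO replaced", probability=0.2, value_per_share=120.0),
]

price = implied_price(scenarios)
for s in scenarios:
    contribution = s.probability * s.value_per_share
    print(f"{s.name}: contributes {contribution:.1f} of {price:.1f}")
```

Read this way, a move in the event market's probability shows how much of a share price change might be attributed to that one risk, which is the kind of granularity the bullets above describe.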

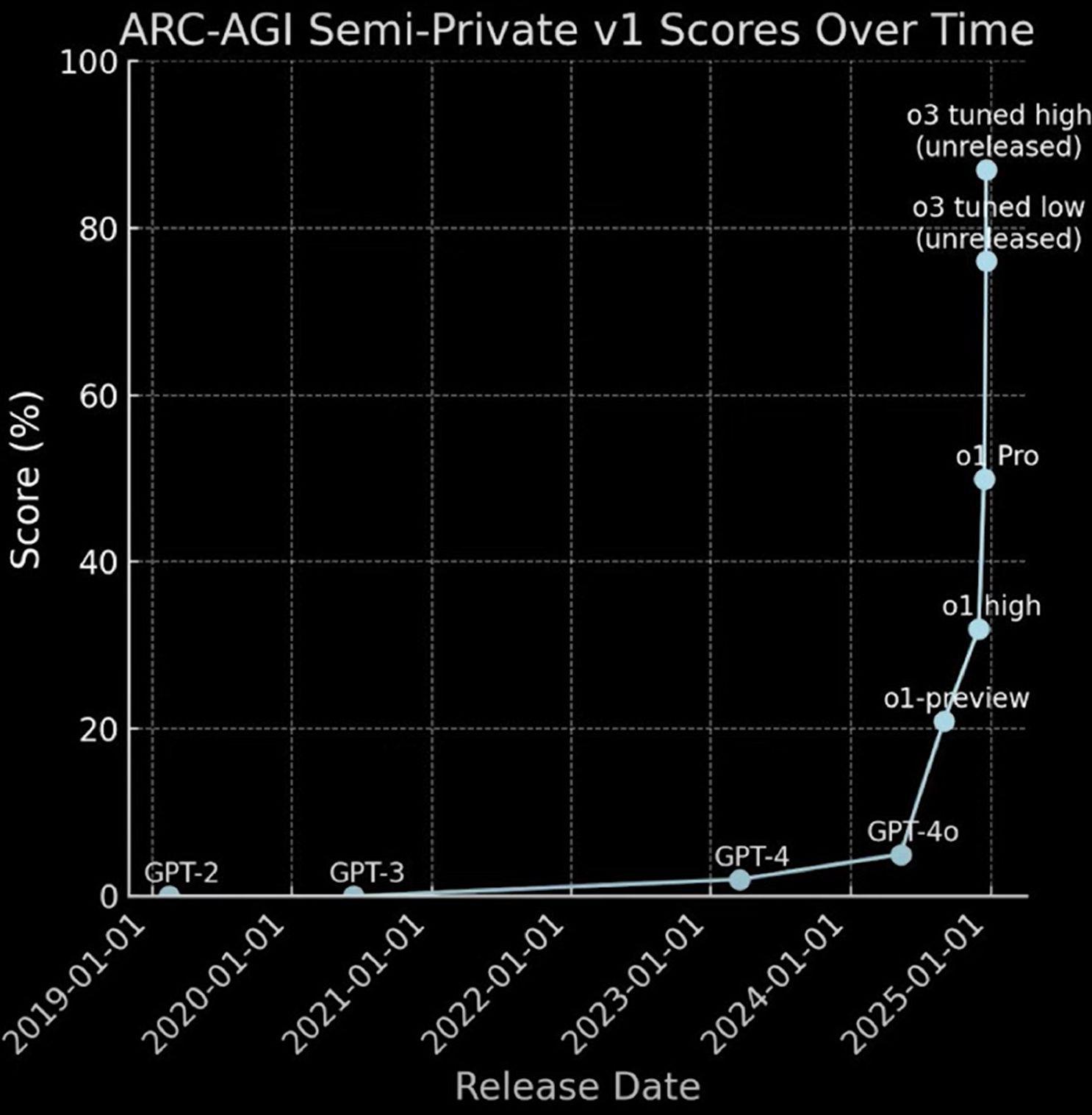

Is AI Progress Accelerating?

- “OpenAI’s new o3 system – trained on the ARC-AGI-1 Public Training set – has scored a breakthrough 75.7% on the Semi-Private Evaluation set at our stated public leaderboard $10k compute limit. A high-compute (172x) o3 configuration scored 87.5%.”

- “This is a surprising and important step-function increase in AI capabilities, showing novel task adaptation ability never seen before in the GPT-family models. For context, ARC-AGI-1 took 4 years to go from 0% with GPT-3 in 2020 to 5% in 2024 with GPT-4o. All intuition about AI capabilities will need to get updated for o3.”

- Source.

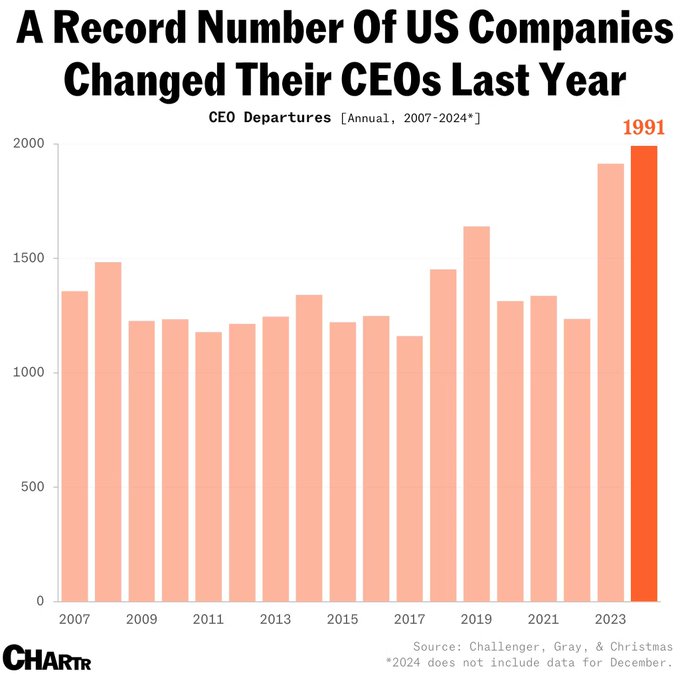

CEO Change

- 2024 stood out as a year where a record number of CEO changes took place.

- Source.

Another 52 Things List

- “AI produces fewer greenhouse gas emissions than humans! Humans emit 27g of CO2 in the time it takes to write three hundred words. ChatGPT, however, performs the same task in 4.4 seconds and produces only 2.2g of CO2.”

- “On average, spouses in the United States have genetic similarity equivalent to that between 4th and 5th cousins.”

- “People know whether or not they want to buy a house in just 27 minutes, but it takes 88 minutes to decide on a couch.”

- Full list here.

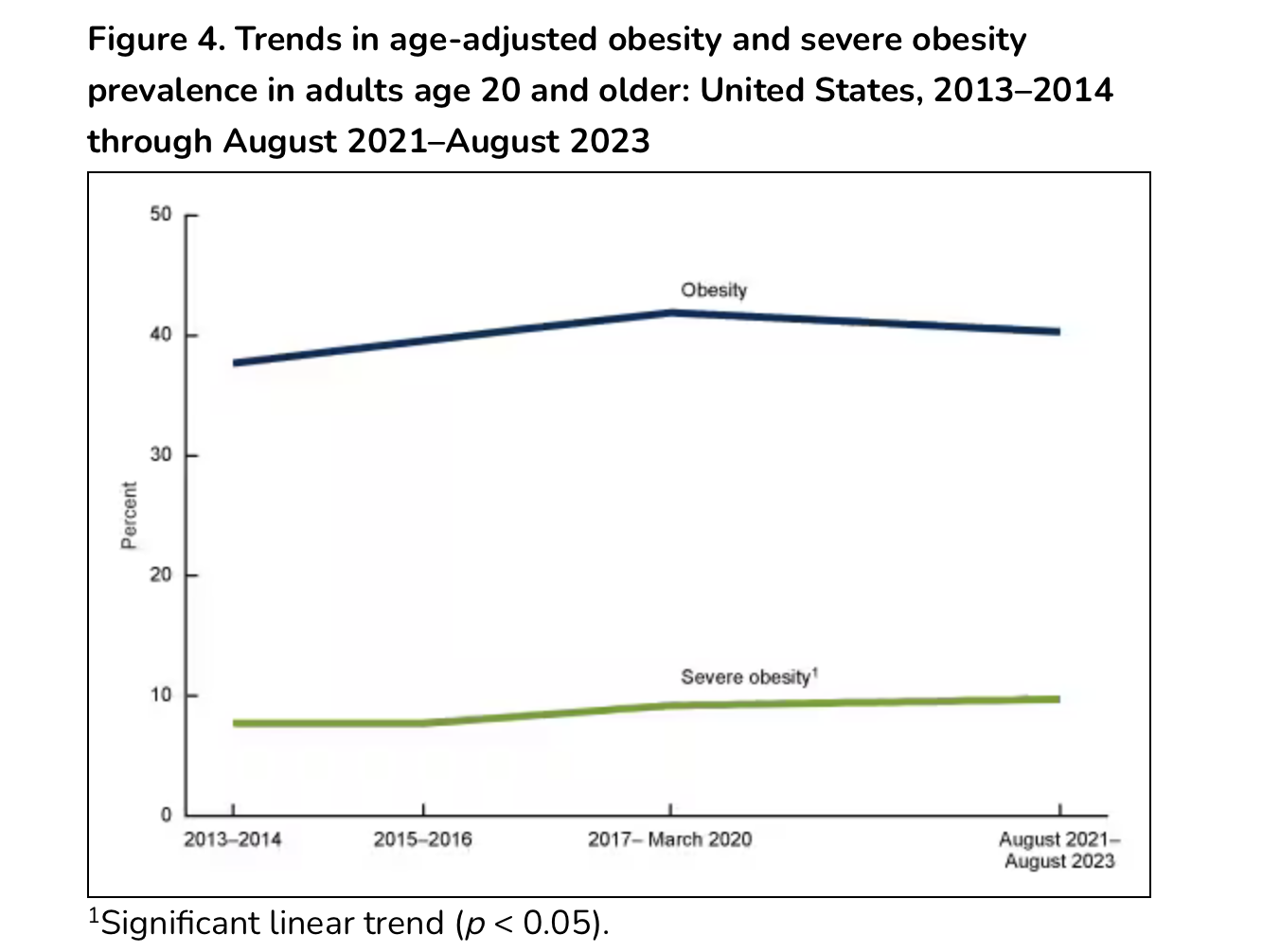

Peak Obesity?

- The press has been infatuated with the latest CDC mean estimate of obesity prevalence in the US ticking down (here and here).

- What they miss is this line from the CDC – “Changes in the prevalence of obesity and severe obesity between the two most recent survey cycles, 2017–March 2020 and August 2021–August 2023, were not significant.”

- In contrast, severe obesity rates continue to climb and this result is significant.

- Source.

Trading Game

- What if you knew the cover of the WSJ on a given day – would you be able to make money?

- Try it.

Subtle Costs

- Productivity statistics never fully capture hanging around or underemployment – both vital for the functioning of the knowledge economy.

- As a result, are we overestimating the impact of AI?

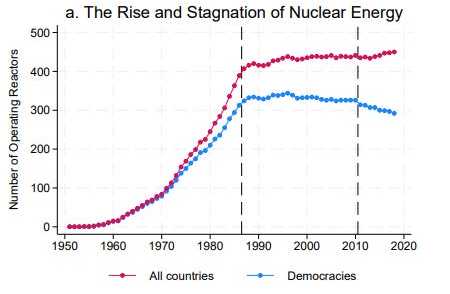

Chernobyl’s Legacy

- The Chernobyl disaster killed 60 people directly and an estimated 60,000 more from radiation poisoning.

- However, abandoning nuclear led to 4 million deaths from air pollution as the world moved to coal-fired power for base load.

- Source.

Transformation of Capital Markets

- Ken Moelis thinks the financial crisis changed the face of transaction banking and capital markets.

90 Facts

- Almost 75% of companies paid a dividend in the late 1970s; it declined to 35% in 2021.

- Only 7% of people made it through Nobel Prize-winning economist Daniel Kahneman’s Thinking, Fast and Slow.

- More here.

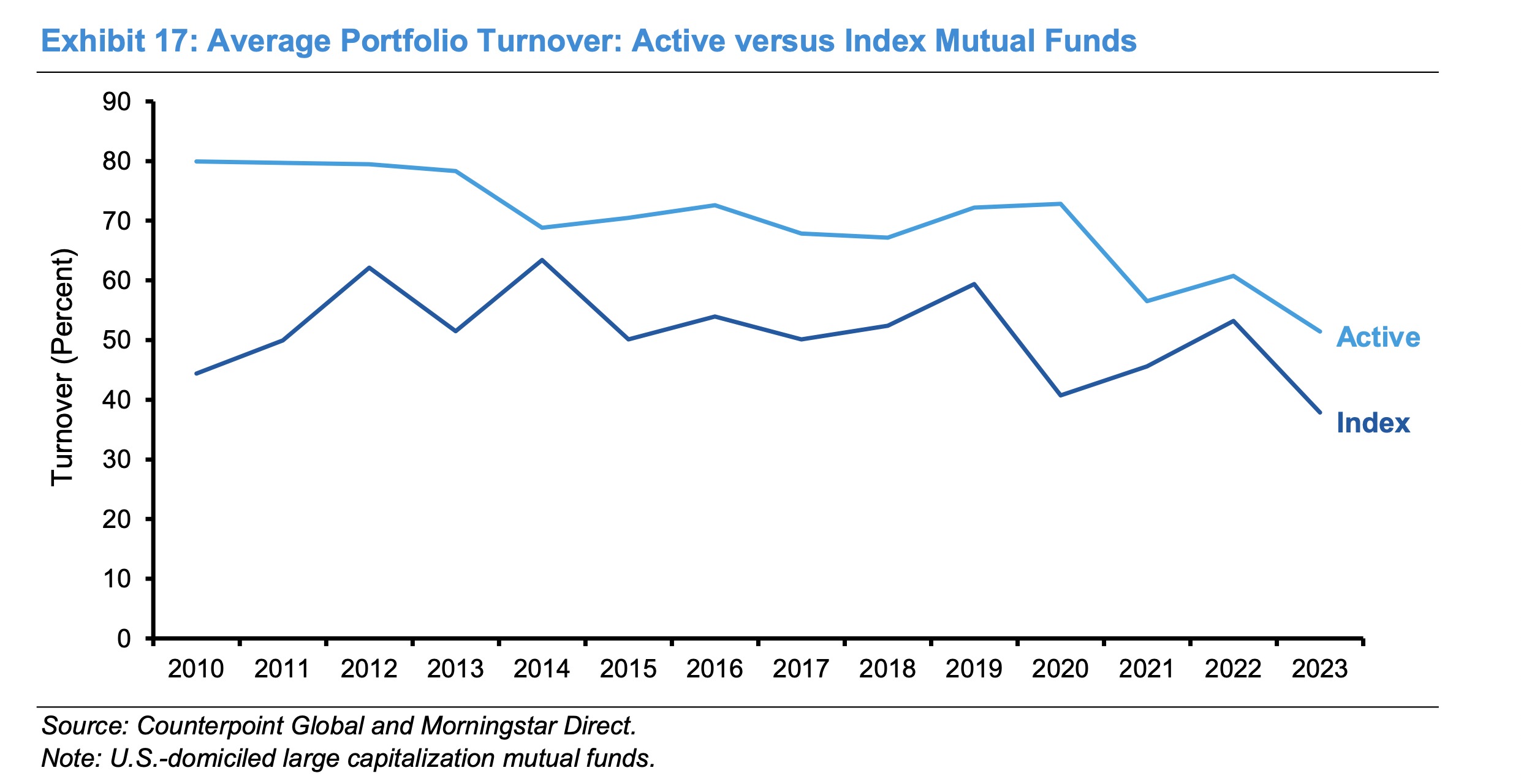

Declining Turnover

- Active manager portfolio turnover has been declining.

- Source.

Natural Diamond Prices Have Been Falling

- Supply (see slide 11) is forecast to fall over the long run.

- Source.

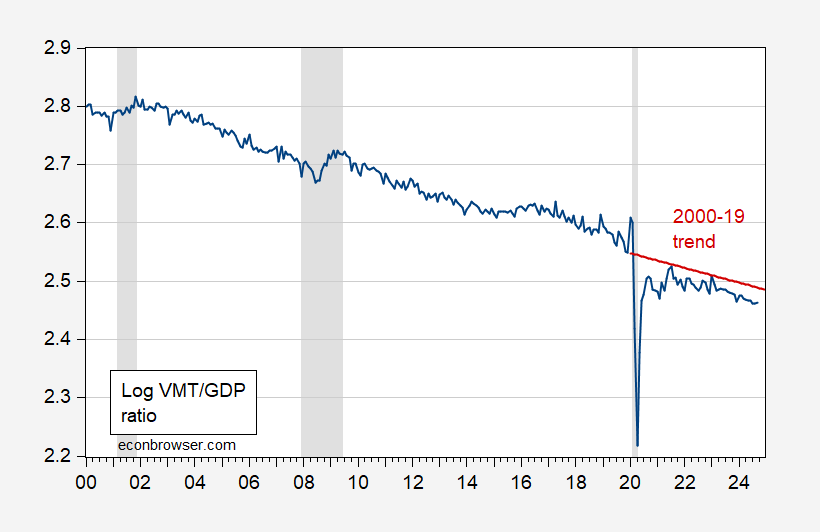

Miles Driven to GDP

- The miles-driven intensity of GDP has been declining.

- Source.

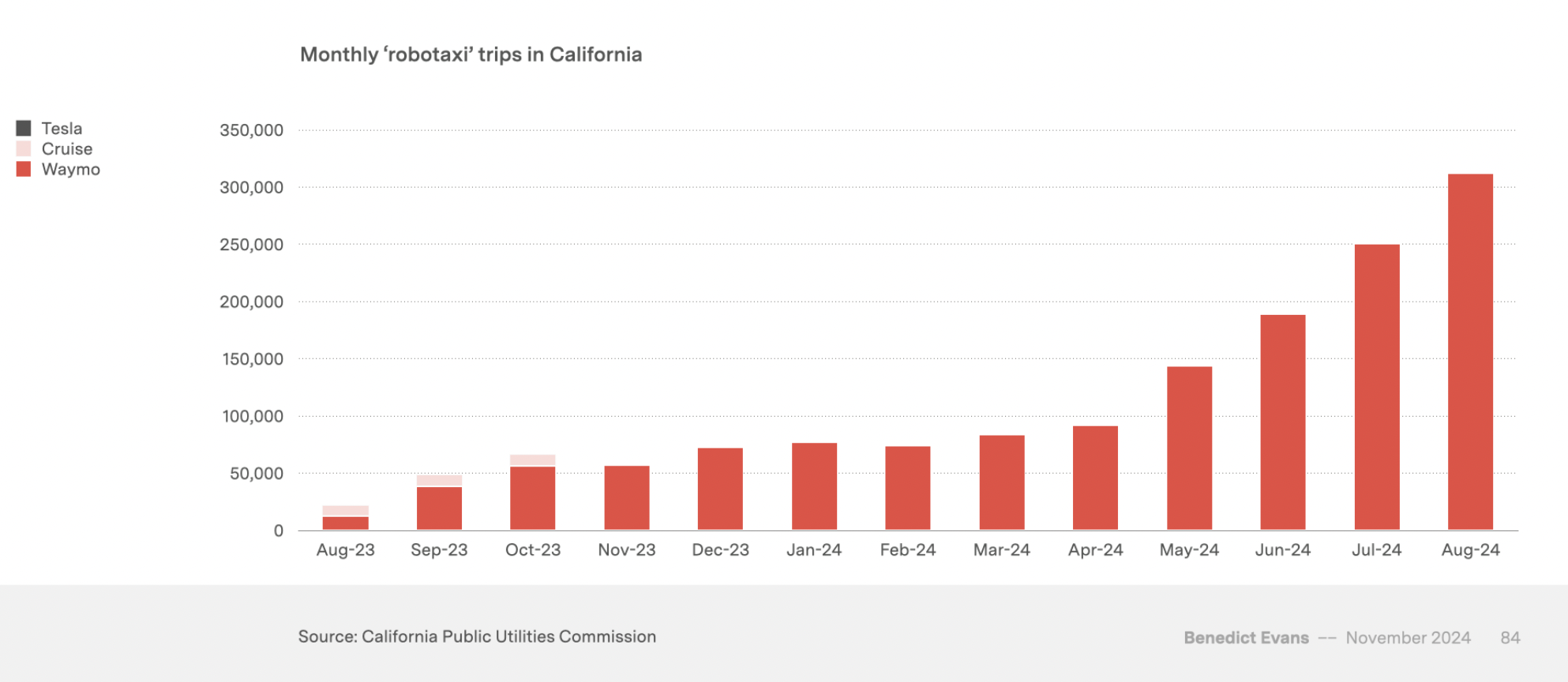

Robotaxis

- First comes the hype, then the disappointment, then it becomes reality (well, with some way still to go).

- Source.

52 Things I learned 2024 Edition

- The list returns.

- “People whose surnames start with U, V, W, X, Y or Z tend to get grades 0.6% lower than people with A-to-E surnames. Modern learning management systems sort papers alphabetically before they’re marked, so those at the bottom are always seen last, by tired, grumpy markers. A few teachers flip the default setting and mark Z to A, and their results are reversed.”

- Ozempic seems to be changing the second-hand clothes market, creating a surge in plus-size women’s apparel sales. Size 3XL listings have doubled over the last two years.